Prove Your Range

From Near to Far Transfer of Knowledge

“Probably the single biggest challenge that you see in the science of learning is a problem of transfer, by which I mean you learn something in one context, typically in a classroom, and then you fail to use it when it’s appropriate in different contexts.”

—Stephen Kosslyn, former Dean of Harvard’s Faculty of Arts and Sciences

One of my favorite nonfiction books is David Epstein’s Range: Why Generalists Triumph in a Specialized World. I give it out during the holidays to friends and family. At a certain point while I was writing my book, Long Life Learning, I had to stop quoting from Range. It was getting a little out of hand.

The concept of range refers to deep analogical thinking, or “the practice of recognizing conceptual similarities in multiple domains or scenarios that may seem to have little in common on the surface.” Range is a kind of flexibility in our knowledge structure that enables us to transfer concepts from one domain to another.

Unfortunately, range isn’t facilitated naturally through our industrial model of education, which prioritizes progress over fixed periods of time. Whether or not we have gaps in our understanding, we advance together as a class from year to year. And most of the content we absorb in school is a form of what mathematician and philosopher Alfred North Whitehead called “inert knowledge,” the kind of knowledge and theory that disappears rapidly from our brains.

Here’s what inert knowledge looks like: Returning students from two different cohorts at the Lawrenceville School in New Jersey were asked to take the same science final they had taken just three months earlier. The average grade of B+ plummeted to an F. The students hadn’t retained the major concepts they had supposedly mastered just a few months prior. That is the fleeting nature of inert knowledge.

The deeper kind of learning that we should aspire to, however, can often look like failure at first because deep learning involves struggle, not ease of mastery. And that kind of struggle doesn’t show up well on tests. Epstein synthesizes a huge body of work by cognitive psychologists demonstrating that lasting knowledge and better performance later in life often come with poor performance in the near term. Deep learning can look and feel slow.

These concepts have shaped the way I parent. My kids are 10 and 12, and they’ve already had to take a fair number of assessments in school. When they get anxious or nervous about the next round of MCAS, as an example, I try to assure them that these tests don’t measure what matters most. My daughter refuses to believe me when I tell her that I don’t care what she scores on her tests. How can she when the system around her sends her a very different message?

Harvard Professor Eric Mazur, whom I featured in a previous blog, puts it more bluntly:

… in education, starting shortly after kindergarten, it becomes a completely individual isolated affair. Yes, you may go to a class and sit there with 20 or 200 or 700 other people, but you’re sitting next to them and you’ve got to listen to the professor. So you’re not interacting, you might as well be alone.

And then you go and study alone and then you go to a room where you have to leave your cell phone and your smartwatch, and the nearest person has been separated by at least six feet from you. You’re not allowed to bring any device that lets you communicate or any book or any notes or anything, and you have to take an exam. And you’re evaluated and assessed individually.

And then we send people into society to take on jobs where they work together, and they solve problems together that no one individually could solve. And they have constant access to information. All right. Putting those two pictures next to each other shows you there’s something really, really, really, really, really, really, really, really wrong with education.

Education is highly individualistic. And as Google Education Evangelist Jaime Casap likes to say, we teach “kids that collaboration is cheating.” We also cordon them off from content that is readily available on any laptop or phone because we still prioritize the acquisition of specific content. And we continue to silo that content into separate and specific disciplines.

Salman Khan, the founder of Khan Academy, calls this the balkanizing or ghettoizing of subjects. We teach narrow forms of intelligence—raising learners to think that literacy is separate from numeracy and science and social studies, or that problems are always domain-specific. We learn how to solve one problem as a math problem or a biology problem or an economics problem divorced from its historical context and its connections to anthropology, policy, or literature.

At one “future of higher education” conference, I asked the head of computer science at a prestigious public university, who was sitting next to me, whether his school would embed more ethics courses into its engineering curriculum. He laughed and shared that the faculty had tried to, but the students pushed back vigorously, refusing any extra requirements on top of their existing load. The students, he said, couldn’t see the relevance of ethics for the kind of work they were trying to accomplish.

I was stunned by that response; it revealed just how much we have managed to train our students to believe that laser-focused specialization is the key to success when the opposite is true. With a greater emphasis on ethics, judgment, futures thinking, and decision making, those engineers could better grasp the first-, second-, and third-order ramifications of the solutions they were putting into the world. In a way that machines simply cannot replicate, they could evaluate and interrogate signals, extrapolate forward, and adjust or modify their models and algorithms.

For me, the question becomes: What are the core skills that we must cultivate to become the best problem solvers? How do we set up knotty and wicked problems so that learners can grapple with and hone the skills that generative A.I. cannot bring to the table?

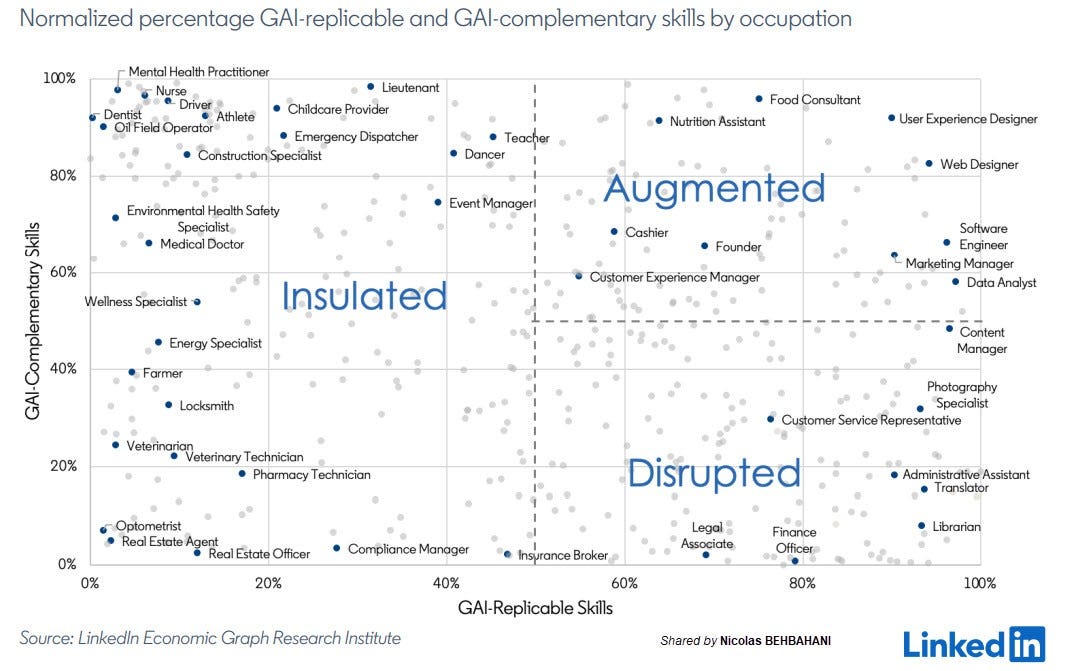

LinkedIn already estimates that generative A.I. is poised to affect at least a quarter of the skills of 85% of all of its members. In a fascinating visualization, the company also suggests the various ways every job will be affected.

I think if we reflect on our educational curricula, we are not preparing learners in a deliberate way for the true core skills required in each of these domains. The evolution of the internet and smartphones wasn’t enough to move our K-12 and postsecondary systems to problem-based or inquiry-based learning. We’ve clung to our lectures, credit hours, and departments. Yet I hope that the sheer, unrelenting, and obvious power of generative A.I. finally pushes us to reimagine the ways we construct learning experiences.

We know, for instance, that at least for now, LLMs do not excel at futures thinking or extrapolation, ethical judgment, active tasks, complex interpretations, and hypotheticals. The emergence of this technology should force us to pose questions and problems to our learners that can’t be answered by cheating.

If we do this right, learners will have to become problem solvers whose habits of mind stretch across disciplines and systems. They’ll have to show their agile and adaptive thinking by connecting ideas from one domain to another.

Learners will have to demonstrate their range.